I did try messing with the hook order but it’s already as early as it can be.

How would bios change how linux loads usb devices?

It works fine in the bios and the bootloader. This only happens during boot.

Reeder tablet that came as a promotion with something. Could barely keep a single app open, sometimes. At some point low spec just means e-waste.

Noticed that wayland with different dpi monitors is slightly less broken now.

I had thought it was about the color profile because with hdr disabled from system settings, enabling the built in color profile desaturates colors quite a bit and does some kind of perceived brightness to luminosity mapping that desaturates bright / dark hdr content even more. Although I don’t think that’s the cause of my problems anymore.

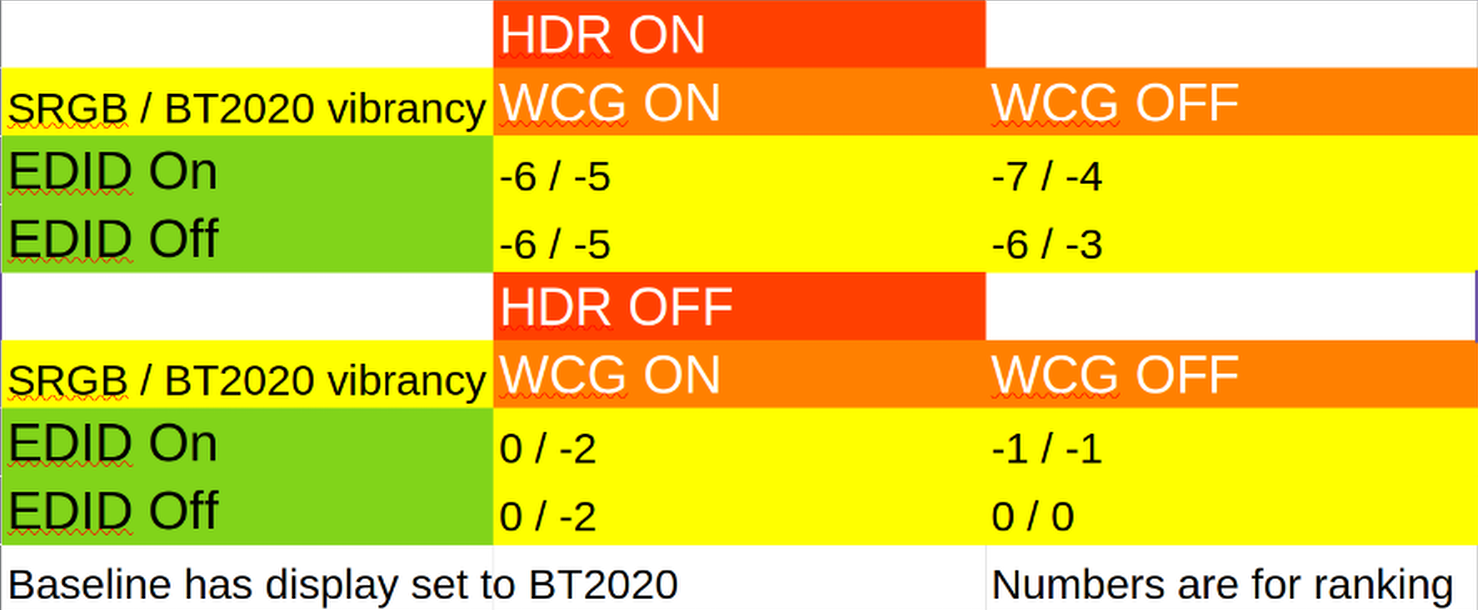

Thanks to your tip about kscreen-doctor, I could try different combinations of hdr / wcg / edid and see how the colors look with different combinations:

I think there must be something wrong with my screen since the hdr reduces saturation more than anything else. Anyways, thanks for the good work

Edit: Tried this with an amd gpu. hdr+wcg works as expected without muted colors. hdr without wcg still significantly desaturates colors, so I guess that’s a monitor bug. Now to figure out gpu passthrough… (Edit 2: It seems to just work??)

Side note, when I turn off hdr only from kscreen-doctor, the display stays in hdr mode until it turns off and on again. That didn’t happen with nvidia.

Edit 3: Found something weirder… Hdr colors are muted on the nvidia gpu and seem vibrant with the amd igpu. If I plug the monitor into the motherboard (amd), enable hdr, then unplug it and plug it into the nvidia gpu, the colors are still vibrant??? I can disable and enable hdr again and again and they aren’t affected. They’re even fine when hdr is enabled without wcg??? But if I fully turn off the monitor and back on, they once again become muted with hdr. Weird ass behavior

You can’t with hdr as I said. Check it yourself if you want

This doesn’t mention the part where if you enable hdr, it sets the color profile to edid without an option to change it, which for my monitor makes everything very desaturated even in comparison to srgb mode (with no color profile)

What would it do?

Edit: piping it, no less

I have an ultrasonic one. When it decides not to recognize my finger it just doesn’t. And sometimes it rumbles as if it had a wrong match while lying on the table on its own

No? Afaik vsync prevents the gpu from sending half drawn frames to the monitor, not the monitor from displaying them. The tearing happens in the gpu buffer. Edit: read the edit

Though I’m not sure how valid the part about latency is. In the worst case scenario (transfer of a frame taking the whole previous frame), the latency of an lcd can only be double that of a crt at the same refresh rate, which 120+ hz already compensates for. And for the inherent latency of the screen, most gaming lcd monitors have less than 5 ms of input lag while a crt on average takes half the frame time to display a pixel, so about 8 ms at 60 hz.

Edit: thought this over again. On crt those 2 happen simultaneously so the total latency is 8ms + pixel response time (which I don’t know the value of). On lcds, the transfer time should be (video stream bandwidth / cable bandwidth) * frame time. And that runs consecutively with the time to display it, which is frame time / 2 + pixel response time. Which could exceed the crt’s latency

BUT I took the input lag number from my monitor’s rtings page and looking into how they get it, it seems it includes both the transfer time and frame time / 2 and it’s somehow still below 5 ms? That’s weird to me since for that the transfer either needs to happen within <1 ms (impossible) or the entire premise was wrong and lcds do start drawing before the entire frame reaches them

Although pretty sure that’s still not the cause of tearing, which happens due to a frame being progressively rendered and written to the buffer, not because it’s progressively transferred or displayed
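For anyone who wants to plug in their own numbers, here is a rough Python sketch of the estimate from the first edit. It assumes the lcd buffers the whole frame before drawing (the premise I ended up doubting), and the function names and example bandwidth values are made up for illustration, not taken from any real monitor.

```python
def transfer_time_ms(stream_gbps, cable_gbps, frame_time_ms):
    """Time to push one frame over the cable:
    (video stream bandwidth / cable bandwidth) * frame time."""
    return stream_gbps / cable_gbps * frame_time_ms


def crt_latency_ms(refresh_hz, pixel_response_ms=0.0):
    """Average crt latency: half a frame time plus pixel response.
    Transfer and display overlap, so transfer adds nothing here."""
    frame_time_ms = 1000.0 / refresh_hz
    return frame_time_ms / 2 + pixel_response_ms


def lcd_latency_ms(refresh_hz, stream_gbps, cable_gbps, pixel_response_ms=0.0):
    """Average lcd latency ASSUMING the whole frame is received before
    drawing starts, so transfer and display run back to back."""
    frame_time_ms = 1000.0 / refresh_hz
    transfer = transfer_time_ms(stream_gbps, cable_gbps, frame_time_ms)
    return transfer + frame_time_ms / 2 + pixel_response_ms


if __name__ == "__main__":
    # Hypothetical example: 60 hz, a 9 Gbps stream on an 18 Gbps cable.
    print(f"crt: {crt_latency_ms(60):.2f} ms")
    print(f"lcd: {lcd_latency_ms(60, 9, 18):.2f} ms")
```

Pixel response time defaults to 0 because I don’t know the real values. Pass your own if you have them.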

I nominate chorus for the AA showdown

Also, was outer worlds considered for this one? I hear it’s also a Bethesda game in space, would be interesting to compare it to starfield

I wrote ml. If you didn’t misread, what are you talking about?

But ml is a type of ai. Just because the word makes you think of androids and skynet doesn’t mean that’s the only thing that can be called so. Personally never understood this attempt at limiting the word to that now while ai has been used for lesser computer intelligences for a long time.

Don’t let Linus see that, my memory isn’t that reliable

550 is the stable driver… This only started happening in a new kernel version and iirc from some thread it is a bug in the kernel. If you don’t have the issue already, just not updating the kernel until this gets fixed would be enough

You can check with `sudo btrfs subvolume list /`

They are different subvolumes in the same filesystem but df doesn’t show subvolumes

This worked. Apparently I had all the usb devices connected to the same controller and it seems linux initialises them controller by controller. Thanks